By Luke Duncalfe

Written for Toby Collett’s “Processing Vision” exhibition at Window Gallery, 17 Nov – 10 Dec 2005. http://www.window.auckland.ac.nz/

From the depressed Marvin of The Hitchhiker’s Guide to the Galaxy to Tony Oursler’s screaming avatars, we recognise more of ourselves in the disorders of behaviour than in any representation of personality functioning as personality ought to. The pioneer robot by Toby Collett and the Robot Group is prone to error and lapses of judgment, and its perception is liable to exaggerate its position. Often the robot believes it has passed through a wall as its systems of response misfire. If this robot were a human it would be prone to hyperbole and fantasy.

Some robots seem to understand that errors and lapses of judgment aid the replication of personality. I describe these as hailing from the paranoid android school of behavioural computing: they replicate personality by embracing the nativity of their bugs, memory leaks, and the poorly structured systems of their own code. They deny themselves the perfection that their Turing-completeness seems to promise.

We have a history of creating avatars of ourselves through our contemporary technology, though this is perhaps the first time that, in seeing ourselves reflected in error-prone technology, we are identifying ourselves as less than perfect. Once, to take one example, the operational mechanics of our hearts and circulatory systems were conceptualised through the mechanical workings of hydraulic clocks (you know, they both tick and all, so it’s perfect)[1]. Likewise, the BBC tends these days to see us as possessing neurological circuitry akin to networked computing, with neurons being the equivalent of servers and the synapses a kind of Internet. The paranoid android then rests alongside a long history of our borrowing from mechanised automata in order to discover selfhood, a degree of self-understanding that, it seems, can only be realised through looking to our artifacts, like an artist who makes sense of their identity through self-portrait.

Collett’s robot crawls, surveys, guesses and responds as if possessing some low-level intelligence. Its dimensions make it the size of a child’s toy, and we tend to view it as such. Its achievements seem endearing, and its quirks make it all the more so. It feels deflationary to realise that for all its displays of spatial recognition and decision-making, of being a day-dreaming wanderer, it is numeric encoding that comprises its “understanding”. As far as a machine that has been set a computational assignment is concerned, its task is mechanically analogous to, say, the banality of word processing. For example, one-tenth of a second of spatial and motional awareness is logged thus:

#Position2D (4:0)

#xpos ypos theta speed sidespeed turn stall

2.698 4.153 4.67748 0.469 0 0.14 0
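
To see how little separates this “awareness” from any other record of numbers, the line above can be read back into named fields. What follows is only a minimal sketch in Python, not the software the robot actually runs: the field names are taken from the log’s own header comment, while the `PositionSample` class and the `parse_position_line` function are hypothetical names introduced here for illustration. Treating `stall` as an integer flag, and the remaining fields as decimal numbers, is an assumption based on the sample values alone; the log does not state its units.

```python
from dataclasses import dataclass

# Field order copied from the log's header comment:
# xpos ypos theta speed sidespeed turn stall
FIELDS = ("xpos", "ypos", "theta", "speed", "sidespeed", "turn", "stall")


@dataclass
class PositionSample:
    """One logged position reading (hypothetical container, for illustration)."""
    xpos: float
    ypos: float
    theta: float
    speed: float
    sidespeed: float
    turn: float
    stall: int  # assumed to be a 0/1 flag, judging by the sample line


def parse_position_line(line: str) -> PositionSample:
    """Split a whitespace-separated data line into its seven named values."""
    parts = line.split()
    if len(parts) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} values, got {len(parts)}")
    *numbers, stall = parts
    # The first six values become floats; the last is kept as an integer.
    return PositionSample(*(float(v) for v in numbers), stall=int(stall))


sample = parse_position_line("2.698 4.153 4.67748 0.469 0 0.14 0")
print(sample.xpos, sample.ypos, sample.theta)
```

Once parsed, the robot’s place in the gallery is nothing more than `sample.xpos` and `sample.ypos`, two numbers among seven.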

The spatial dimensions of the Window gallery are to the robot mere packets of data to be processed; awareness to the robot is homogeneous binary, whether it be colour, space, proximity, or the data structures of its own historical “memory”. This is what Manovich refers to when he describes two discrete layers of human-computer interaction that effectively exist in ultimate separation from each other[2]. If we allow ourselves to rationalise the mimicry of a behavioural machine as the achievements of a machine solely within its own numerical representation of physical dimensions, then a degree of alienation occurs between the robot and oneself; what seemed culturally affirmative becomes affirmative only of the cultural-mechanical divide in the cognitive reality of the two.

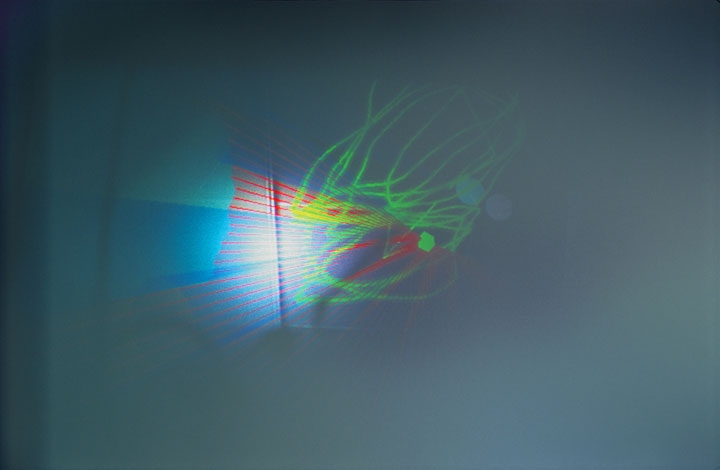

Yet this exhibition has been named Processing Vision. At first this seems a merely metaphorical use of the word vision, given that vision to a computer-driven robot is meaningless beyond providing it with feedback to be harvested into binary. Then one notes that the resultant vision provided by the projections in Window OnSite and the paths mapped on the pages of Window OnLine are not visions of sight but are in fact visions of data. They are pie graphs, lines of sonar range, and x and y pathways of relayed spatial coordinates of Collett’s robot in the gallery, which provide insight into the processing means of the machine at the centre of the spew of information.

In these visions the processing is made transparent: we can see this isn’t an avatar with human-equivalent faculties of sight; it is instead presented as a reaper of visual and spatial measurements from its trips around Window OnSite, to which it can respond. As curator, Stephen Cleland has cast the robot as a topographical image-maker of the gallery. Through reading this proxy of space on screen we experience the data relationships of the gallery much as the robot does through its instrumentation, a kind of augmented spatial reality like the sight of the Terminator robot of 1984, where graphical measurements and internal processing flashed up like tool tips.

It could be suggested that this arrangement forms a bridge across Manovich’s division, by which we can relate to the vision of the robot through a data image devoid of a computationally-false version of spatiality. Because glazing has been applied to the window structure to obscure our view into the site, one understands the movements of the robot through its own computational understanding of its encounter with its environment. The priorities of Collett’s robot are based on measurement, and so one interprets the projected and streamed corollaries of space on what approximates the robot’s own terms. Window’s two exhibition spaces, OnSite and OnLine, become screens to the robot’s thinking and interfaces from which to peer into the mechanics of robotic vision; the data sources and data outposts of a paranoid android.

1. Aram Vartanian, Man-Machine from the Greeks to the Computer, http://etext.lib.virginia.edu/cgi-local/DHI/dhi.cgi?id=dv3-17

2. Lev Manovich, The Language of New Media, p. 46